AI-Driven Valuation Models for Commercial Portfolios

Data Sources and Model Training

Data Acquisition and Preprocessing

Gathering relevant data is a crucial first step in any machine learning project. This involves identifying suitable datasets from various sources, such as public repositories, internal databases, or web scraping. The quality and quantity of the data directly impact the model's performance. Thorough data cleaning and preprocessing are essential to remove inconsistencies, handle missing values, and transform data into a suitable format for the chosen machine learning model. This often includes tasks like outlier detection, data normalization, and feature engineering.
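Two of the cleaning steps mentioned above, handling missing values and detecting outliers, can be sketched in a few lines. This is a minimal illustration, not a prescribed pipeline: the function names, the choice of median imputation, Tukey's 1.5 × IQR rule, and the price-per-square-foot figures are all assumptions made for the example.

```python
from statistics import median, quantiles

def impute_median(values):
    """Replace None entries with the median of the observed values."""
    observed = [v for v in values if v is not None]
    fill = median(observed)
    return [fill if v is None else v for v in values]

def iqr_outlier_flags(values, k=1.5):
    """Flag values outside [Q1 - k*IQR, Q3 + k*IQR] (Tukey's rule)."""
    q1, _, q3 = quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v < low or v > high for v in values]

# Hypothetical price-per-square-foot figures; None marks a missing record.
price_per_sqft = [210.0, 195.0, None, 205.0, 220.0, 1900.0, 200.0, 215.0]

cleaned = impute_median(price_per_sqft)   # the gap is filled with the median
flags = iqr_outlier_flags(cleaned)        # only the 1900.0 entry is flagged
```

Flagged records are usually worth reviewing rather than deleting automatically, since an extreme value may be a data-entry error or a genuinely unusual property.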

Careful consideration must be given to the representativeness of the dataset. A biased dataset can lead to a biased model, resulting in inaccurate predictions or unfair outcomes. Ensuring the dataset reflects the real-world scenario is paramount. Data preprocessing techniques, such as handling imbalanced classes and addressing potential data leakage, are important steps to improve the model's accuracy and reliability. Data validation is also necessary to confirm the integrity and quality of the prepared dataset before model training.
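One common source of data leakage in time-stamped data is letting records from the future inform predictions about the past. A simple guard, sketched below under the assumption that each record carries a sale date, is to split chronologically rather than at random. The record layout, field names, and cutoff date are hypothetical.

```python
from datetime import date

def chronological_split(records, cutoff):
    """Train on sales closed before `cutoff`; test on sales on or after it."""
    train = [r for r in records if r["sale_date"] < cutoff]
    test = [r for r in records if r["sale_date"] >= cutoff]
    return train, test

# Hypothetical sales records, invented for illustration.
sales = [
    {"id": "A", "sale_date": date(2021, 3, 1), "price": 4_200_000},
    {"id": "B", "sale_date": date(2022, 7, 15), "price": 5_100_000},
    {"id": "C", "sale_date": date(2023, 2, 10), "price": 4_800_000},
    {"id": "D", "sale_date": date(2023, 9, 30), "price": 6_000_000},
]

train, test = chronological_split(sales, cutoff=date(2023, 1, 1))
```

With this split, every training record predates every test record, so the evaluation more closely resembles how the model would be used on new transactions.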

Furthermore, understanding the context and characteristics of the data is critical. This understanding guides the selection of appropriate preprocessing steps and models. A deep dive into the data’s origin, structure, and potential biases is essential. Identifying potential patterns or correlations within the data can also provide valuable insights for further analysis and model development.

Model Selection and Training

Choosing the appropriate machine learning model is a critical step in the process. Different models excel in different tasks, and the selection depends heavily on the problem being solved and the characteristics of the data. Factors such as the type of data (structured, unstructured, or semi-structured), the desired output (classification, regression, or clustering), and the computational resources available all play a role in this decision.

Once a model is selected, the next step is to train it using the prepared dataset. This involves feeding the data to the model and adjusting its internal parameters to minimize errors in predictions. Different training algorithms and techniques can be employed depending on the chosen model. Properly configuring the model’s hyperparameters is essential for optimal performance and avoiding overfitting or underfitting.
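To make "adjusting internal parameters to minimize errors" concrete, here is a minimal sketch of gradient descent fitting a one-feature linear model by minimizing mean squared error. The learning rate and epoch count are the hyperparameters in this toy setting; their values and the data are assumptions chosen for illustration, not recommendations.

```python
def fit_linear(xs, ys, lr=0.02, epochs=5000):
    """Fit y ~ w*x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of MSE with respect to w and b.
        grad_w = 2 / n * sum((w * x + b - y) * x for x, y in zip(xs, ys))
        grad_b = 2 / n * sum((w * x + b - y) for x, y in zip(xs, ys))
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

# Toy data generated from y = x + 0.5 (e.g. floor area vs. price, arbitrary units).
xs = [1.0, 2.0, 3.0, 4.0, 5.0]
ys = [1.5, 2.5, 3.5, 4.5, 5.5]

w, b = fit_linear(xs, ys)  # converges close to w = 1.0, b = 0.5
```

A learning rate that is too large makes the updates diverge, while one that is too small needs far more epochs, which is one reason hyperparameter configuration matters.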

Monitoring the training process is also crucial. This includes tracking key metrics such as loss and, for classification tasks, accuracy, precision, and recall; regression tasks such as valuation typically rely on error measures instead. Evaluating the model regularly on data held out from training shows whether it generalizes to unseen data and allows the model or training process to be adjusted before overfitting sets in.
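For a valuation model, which predicts a continuous price, error-based measures are a natural set of metrics to track. The sketch below computes three widely used ones: mean absolute error (MAE), root mean squared error (RMSE), and mean absolute percentage error (MAPE). The function name and the sample figures are assumptions for illustration.

```python
import math

def regression_metrics(actual, predicted):
    """Return MAE, RMSE, and MAPE for paired actual/predicted values."""
    errors = [p - a for a, p in zip(actual, predicted)]
    n = len(errors)
    mae = sum(abs(e) for e in errors) / n
    rmse = math.sqrt(sum(e * e for e in errors) / n)
    # MAPE assumes every actual value is non-zero.
    mape = sum(abs(e) / abs(a) for e, a in zip(errors, actual)) / n
    return {"mae": mae, "rmse": rmse, "mape": mape}

# Hypothetical sale prices and model estimates (arbitrary units).
actual = [100.0, 200.0, 400.0]
predicted = [110.0, 180.0, 400.0]

metrics = regression_metrics(actual, predicted)
```

Computing the same metrics on both the training data and a held-out set is a simple overfitting check: a training error far below the held-out error suggests the model has memorized rather than generalized.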

Overcoming Challenges in AI Valuation

Defining the Scope of AI Valuation

AI-driven valuation models are rapidly transforming how we assess the worth of commercial properties. These models leverage vast datasets, sophisticated algorithms, and machine learning techniques to analyze complex factors influencing property value, such as market trends, location specifics, and comparable sales data. Understanding the specific parameters and data points considered critical to a particular valuation model is crucial to ensuring accuracy and reliability.

While these models are promising, implementing them requires careful attention to data quality, to potential biases within the data, and to ongoing model validation so that accuracy stays consistent over time. This careful evaluation is essential for building trust and confidence in the results.

Data Acquisition and Preparation for AI Models

Accurate and comprehensive data is the cornerstone of any successful AI valuation model. Gathering relevant historical sales data, demographic information, property characteristics, and market trends is essential. However, the sheer volume and variety of data sources can be overwhelming. Efficient data collection strategies and robust data cleaning techniques are essential to ensure that the models are trained on reliable and unbiased information.

Furthermore, preparing this data for use in AI models often requires considerable preprocessing, including data transformation, normalization, and handling missing values. Properly handling these steps is critical for ensuring the model’s accuracy and avoiding potential errors.
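As one example of normalization, the sketch below standardizes a feature to zero mean and unit variance (z-scores). It assumes the scaling statistics are computed from training data only and then reused for new data, a common way to keep information from evaluation data out of preprocessing. The function names and floor-area figures are hypothetical.

```python
from statistics import mean, pstdev

def fit_scaler(train_values):
    """Compute mean and population standard deviation from training data."""
    mu, sigma = mean(train_values), pstdev(train_values)
    if sigma == 0:
        raise ValueError("feature is constant; z-scores are undefined")
    return mu, sigma

def apply_scaler(values, mu, sigma):
    """Standardize values using previously fitted statistics."""
    return [(v - mu) / sigma for v in values]

# Hypothetical floor areas in square feet.
train_area = [10_000, 20_000, 30_000]
new_area = [40_000]

mu, sigma = fit_scaler(train_area)
train_scaled = apply_scaler(train_area, mu, sigma)
new_scaled = apply_scaler(new_area, mu, sigma)  # reuses the training statistics
```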

Developing and Training AI Valuation Models

Choosing the right AI model architecture is paramount. Different models, such as regression models, deep learning networks, or ensemble methods, are suited to different types of data and valuation challenges. Careful selection and tuning of model parameters, along with the consideration of potential overfitting or underfitting issues, are critical to optimizing performance.

Training these models can require significant computational resources and expertise, particularly for deep learning networks. The training process involves feeding the prepared data into the selected model and iteratively adjusting its parameters to minimize prediction error. This process demands careful monitoring and evaluation to ensure the model is learning effectively and not overfitting to the training data.

Model Validation and Testing

Rigorous validation and testing are essential to assess the accuracy and reliability of the AI valuation model. This involves evaluating the model's performance on independent datasets not used during training. Methods like cross-validation and hold-out sets are crucial to ensure the model generalizes well to unseen data.
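The mechanics of k-fold cross-validation can be sketched as follows: the data is divided into k contiguous folds, and each fold serves once as the test set while the remaining folds form the training set. This is a minimal, unshuffled version written for illustration; the function name is an assumption, and real projects often shuffle first or use a time-aware variant.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_idx, test_idx) pairs for k contiguous folds."""
    # Spread any remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        stop = start + size
        test_idx = list(range(start, stop))
        train_idx = [i for i in range(n_samples) if i < start or i >= stop]
        yield train_idx, test_idx
        start = stop

folds = list(k_fold_indices(n_samples=10, k=3))
for train_idx, test_idx in folds:
    print(f"train={train_idx} test={test_idx}")
```

Averaging the evaluation metric across the folds gives a more stable estimate of out-of-sample performance than a single hold-out split, at the cost of training the model k times.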

Identifying potential biases in the model's predictions is also critical. This requires analyzing the model's performance across different demographic groups, property types, and market segments. Addressing these biases and mitigating their impact on the valuation results is crucial to ensure fairness and equity.
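A simple starting point for this kind of analysis is to compute the same error metric separately for each segment and compare the results. The sketch below does this with mean absolute percentage error; the record layout, segment labels, and figures are hypothetical.

```python
from collections import defaultdict

def mape_by_segment(records):
    """Return mean absolute percentage error for each segment label."""
    pct_errors = defaultdict(list)
    for r in records:
        pct_errors[r["segment"]].append(abs(r["predicted"] - r["actual"]) / r["actual"])
    return {seg: sum(errs) / len(errs) for seg, errs in pct_errors.items()}

# Hypothetical valuations, invented for illustration (arbitrary units).
records = [
    {"segment": "office", "actual": 100.0, "predicted": 105.0},
    {"segment": "office", "actual": 200.0, "predicted": 190.0},
    {"segment": "retail", "actual": 100.0, "predicted": 130.0},
    {"segment": "retail", "actual": 50.0, "predicted": 40.0},
]

segment_errors = mape_by_segment(records)
```

In this toy data the retail error is several times the office error, the kind of gap that would prompt a closer look at how well retail properties are represented in the training set.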

Overcoming Data Limitations and Bias

Real-world data often contains limitations and biases that can impact the accuracy and fairness of AI valuation models. Missing data, outliers, and inconsistencies in data formats can all affect the model's performance. Developing strategies to handle these challenges is crucial to building robust and reliable models.

Moreover, understanding and addressing potential biases embedded in the data is essential. These biases can stem from historical market trends, discriminatory practices, or simply limited data representation. Techniques for detecting and mitigating these biases are crucial for developing fair and equitable AI valuation models.

Ensuring Transparency and Interpretability

Transparency and interpretability are key factors in building trust and confidence in AI-driven valuation models. Understanding how the model arrives at its valuation conclusions is essential for stakeholders to comprehend the reasoning behind the results. Techniques to improve model transparency, such as explainable AI (XAI) methods, can help bridge the gap between complex models and human understanding.
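Permutation importance is one widely used model-agnostic explanation technique; the article does not name a specific XAI method, so it is chosen here purely as an illustration. The idea is to shuffle one feature at a time and measure how much the prediction error grows. The toy model and data below are assumptions made for the sketch.

```python
import random

def permutation_importance(predict, rows, targets, n_features, seed=0):
    """Increase in mean absolute error when each feature column is shuffled."""
    rng = random.Random(seed)

    def mae(data):
        return sum(abs(predict(r) - t) for r, t in zip(data, targets)) / len(targets)

    baseline = mae(rows)
    importances = []
    for j in range(n_features):
        column = [r[j] for r in rows]
        rng.shuffle(column)
        # Rebuild each row with feature j replaced by its shuffled value.
        shuffled = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
        importances.append(mae(shuffled) - baseline)
    return importances

def toy_model(row):
    """Hypothetical model: value depends on floor area only and ignores age."""
    area, age = row
    return 2.0 * area

rows = [[1.0, 10.0], [2.0, 20.0], [3.0, 5.0], [4.0, 30.0], [5.0, 15.0]]
targets = [toy_model(r) for r in rows]

importances = permutation_importance(toy_model, rows, targets, n_features=2)
```

Because the toy model ignores building age, shuffling that column leaves the error unchanged and its importance is exactly zero, while shuffling floor area can only make the error worse. A single shuffle is noisy, so in practice the result is usually averaged over several repetitions.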

Furthermore, clear documentation of the model's development process, data sources, and validation results is essential for reproducibility and auditing purposes. This documentation ensures the model's reliability and can be vital in cases where the model's valuation is challenged.

The Future of AI in Commercial Real Estate Valuation

The Rise of AI-Powered Tools in Commercial Real Estate

The integration of artificial intelligence (AI) into commercial real estate is rapidly transforming the industry, offering unprecedented opportunities for efficiency and profitability. From automating routine tasks to providing sophisticated market analyses, AI-driven tools are poised to reshape how real estate professionals operate. This technological advancement is not just about streamlining existing processes; it's about unlocking new insights and possibilities within the market. This includes predicting market trends with greater accuracy, identifying optimal investment opportunities, and personalizing the client experience.

AI-powered platforms are already demonstrating their potential by providing real-time data analysis, allowing for quicker decision-making. These platforms can process vast amounts of data, including market trends, property valuations, and tenant demographics, to identify emerging opportunities and risks. This ability to analyze massive datasets with speed and precision is revolutionizing how commercial real estate professionals approach their work. The use of AI in commercial real estate is not just a trend; it's a fundamental shift towards a more data-driven and efficient future.

Enhanced Efficiency and Personalized Experiences

One of the most significant impacts of AI in commercial real estate is improved efficiency. AI algorithms can automate or assist with parts of tasks such as property inspections, lease negotiations, and tenant screening, freeing up time for real estate professionals to focus on strategic initiatives and client interactions. This automation can increase productivity and reduce the likelihood of human error.

Furthermore, AI allows for a more personalized experience for clients. By analyzing individual preferences and needs, AI-powered systems can tailor property recommendations and services to each client, leading to improved satisfaction and stronger client relationships. This personalization significantly improves the client experience, fostering trust and loyalty.

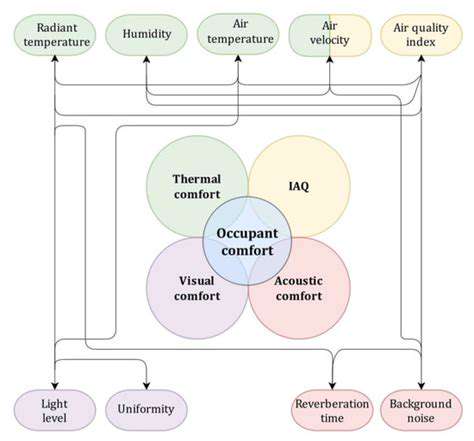

AI-driven tools can also provide valuable insights into tenant behavior and preferences, enabling property owners to optimize space utilization and maximize profitability. By understanding the needs of tenants, owners can create more desirable and productive environments, leading to increased occupancy rates and higher rental yields. This data-driven approach is pivotal to long-term success in the evolving commercial real estate landscape.

The integration of AI also facilitates more streamlined communication, leading to better collaboration between various stakeholders in the commercial real estate ecosystem. AI-powered chatbots and virtual assistants can handle routine inquiries, freeing up human agents to address complex issues and engage in strategic conversations.

Read more about AI Driven Valuation Models for Commercial Portfolios

Hot Recommendations

- AI in Property Marketing: Virtual Tours and VR

- Water Management Solutions for Sustainable Real Estate

- IoT Solutions for Smart Building Energy Management

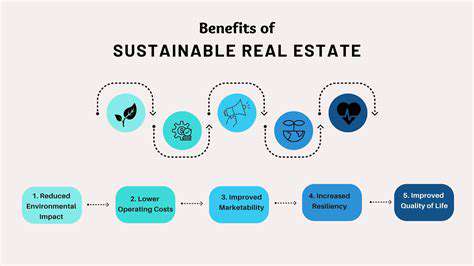

- Sustainable Real Estate: Building a Greener Tomorrow

- Sustainable Real Estate: From Concept to Community

- AI Driven Due Diligence for Large Scale Developments

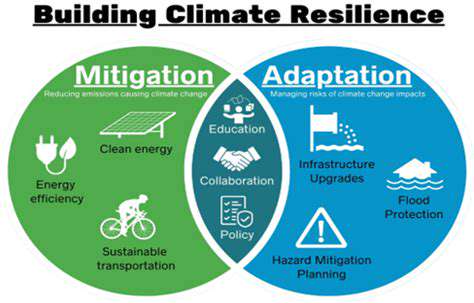

- Real Estate Sector and Global Climate Agreements

- Smart Buildings: The Key to Smarter Property Management

- Zero Waste Buildings: A Sustainable Real Estate Goal

- Understanding Climate Risk in Real Estate Financing