AI in Real Estate Transaction Automation: End to End

Introduction: The Digital Transformation of Property Markets

The Game-Changing Nature of Intelligent Systems

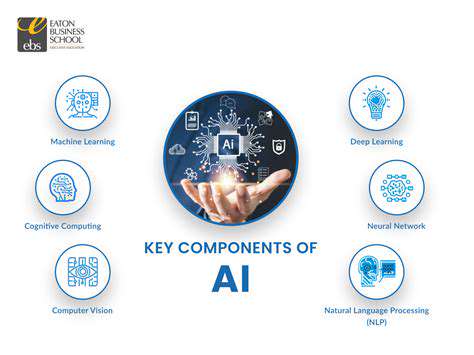

Property markets are changing as analytical software becomes more capable. These tools analyze large datasets, surface patterns that are hard to spot manually, and automate procedures that previously required human judgment. The result is a change in how real estate assets are evaluated, purchased, and managed, one that reaches every stage of a transaction from initial listing to final settlement.

More powerful computing resources combined with better algorithms have made this possible. Data-driven insights increasingly guide major property decisions, complementing traditional human expertise with quantitative evidence.

Sector-Wide Impacts of Computational Advancements

Property valuation methods have changed the most, with models now able to weigh hundreds of variables at once. Mortgage approvals benefit from more accurate risk assessments, and contract reviews that once took days can now be completed in minutes. The cumulative effect is shorter transaction timelines and fewer errors throughout the process.

Urban planning and development are also being reshaped, with predictive modeling informing infrastructure investments and zoning decisions. These tools help stakeholders anticipate market trends and make more informed long-term decisions about property utilization.

Balancing Innovation with Responsibility

As these technologies proliferate, important questions emerge about equitable access and fair implementation. The potential for algorithmic bias in property valuations requires careful monitoring, while data privacy concerns demand robust security frameworks. Thoughtful governance will be essential to ensure these tools benefit all market participants.

Transparency in decision-making processes remains crucial, particularly when automated systems influence major financial decisions. Establishing clear accountability mechanisms helps maintain trust as these technologies become more pervasive in property transactions.

Future Directions in Property Technology

Emerging capabilities in natural language processing are streamlining document analysis, while computer vision enhances property inspections. These innovations promise to further reduce friction in real estate transactions while improving accuracy. The integration of these technologies with blockchain systems may soon enable entirely new transaction models.

Looking ahead, we can anticipate more personalized property recommendations and dynamic pricing models that respond to real-time market conditions. The most successful implementations will likely combine cutting-edge technology with deep human expertise, creating hybrid systems that leverage the strengths of both.

Overcoming Adoption Hurdles

Widespread implementation faces several practical challenges, from legacy system integration to workforce training needs. Cost considerations remain significant, though the long-term efficiency gains often justify the initial investment. Addressing these barriers requires coordinated effort across the industry.

Developing standardized frameworks for system interoperability could accelerate adoption, while targeted training programs can help professionals adapt to these new tools. The ultimate goal is creating technological solutions that enhance rather than replace human expertise in property transactions.

Core Elements of Intelligent Property Transaction Systems

Data Collection and Preparation

High-quality information forms the foundation of effective property analysis systems. Comprehensive data strategies must account for diverse property characteristics, market conditions, and environmental factors. This involves gathering information from multiple sources, validating its accuracy, and structuring it for analytical purposes. Special attention must be paid to handling incomplete records and ensuring balanced representation across different property types and locations.

Data refinement techniques like normalization and feature selection enhance the system's ability to identify meaningful patterns. These preprocessing steps significantly impact the quality of subsequent analyses and recommendations.
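As a rough illustration of the preparation steps described above, the sketch below fills missing values with the column median and then rescales the column onto the range 0 to 1 (min-max normalization). The function names and the sample `square_feet` values are hypothetical, and median imputation is only one of several reasonable ways to handle incomplete records.

```python
from statistics import median


def impute_median(values):
    """Replace None entries with the median of the observed values."""
    observed = [v for v in values if v is not None]
    fill = median(observed)
    return [fill if v is None else v for v in values]


def min_max_normalize(values):
    """Rescale values linearly onto [0, 1]; a constant column maps to 0.0."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


# Hypothetical listing data; None marks a missing field.
square_feet = [1200, None, 1800, 2400, 1500]
filled = impute_median(square_feet)
scaled = min_max_normalize(filled)
```

Normalizing after imputation keeps the filled-in value on the same scale as the observed ones, so a single large feature such as floor area does not dominate smaller-valued features in later analysis.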

System Architecture and Training

Selecting appropriate analytical models depends on specific use cases and available data characteristics. The ideal approach varies significantly between valuation models, risk assessment tools, and market prediction systems. Each application requires careful consideration of model complexity, interpretability needs, and computational requirements.

Training is iterative: the model is refined until performance stops improving, with particular attention to overfitting, where a model memorizes its training data instead of learning patterns that generalize. Guarding against overfitting helps the system stay accurate on properties and market conditions beyond its training data.

Performance Assessment

Rigorous testing is essential before deploying any analytical system. Evaluation with appropriate metrics shows how well the system performs across different property types and market segments. Techniques like cross-validation, which repeatedly hold out part of the data for testing, give an estimate of how well the system will generalize to novel situations.
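To make the idea of cross-validation concrete, here is a minimal sketch that fits a one-variable least-squares line (price against floor area) and averages the mean absolute error over k held-out folds. The model, the interleaved fold assignment, and the sample figures are all assumptions chosen for brevity; a production valuation model would use many more features.

```python
from statistics import mean


def fit_line(xs, ys):
    """Ordinary least squares for y = a + b * x; returns (a, b)."""
    mx, my = mean(xs), mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    b = sxy / sxx
    return my - b * mx, b


def k_fold_mae(xs, ys, k):
    """Mean absolute error averaged over k held-out folds."""
    n = len(xs)
    fold_errors = []
    for fold in range(k):
        # Every k-th record, offset by the fold number, is held out.
        test_idx = set(range(fold, n, k))
        train = [(xs[i], ys[i]) for i in range(n) if i not in test_idx]
        test = [(xs[i], ys[i]) for i in range(n) if i in test_idx]
        a, b = fit_line([x for x, _ in train], [y for _, y in train])
        fold_errors.append(mean([abs(y - (a + b * x)) for x, y in test]))
    return mean(fold_errors)


# Hypothetical data: floor area (thousand sq ft) and sale price (thousands).
sizes = [1.0, 1.2, 1.5, 1.8, 2.0, 2.4]
prices = [255, 288, 352, 410, 447, 533]
cv_error = k_fold_mae(sizes, prices, k=3)
```

Because each record is scored only by a model that never saw it during fitting, `cv_error` is a more honest estimate of out-of-sample error than the error measured on the training data itself.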

Implementation and Oversight

Deploying these systems requires careful integration with existing workflows. Successful implementations consider both technical compatibility and user experience factors. Continuous monitoring ensures sustained performance as market conditions evolve, with mechanisms for periodic recalibration as needed.
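One simple way to picture continuous monitoring is a rolling window of recent prediction errors that raises a flag when the average drifts past a tolerance. The `ErrorMonitor` class, its window size, and its threshold are hypothetical choices for this sketch, not a prescribed design.

```python
from collections import deque


class ErrorMonitor:
    """Tracks recent absolute percentage errors and flags possible drift."""

    def __init__(self, window, threshold):
        self.errors = deque(maxlen=window)  # oldest entries drop off automatically
        self.threshold = threshold

    def record(self, predicted, actual):
        """Store the absolute percentage error for one closed transaction."""
        self.errors.append(abs(predicted - actual) / actual)

    def needs_recalibration(self):
        """True once the window is full and its mean error exceeds the threshold."""
        if len(self.errors) < self.errors.maxlen:
            return False  # too little evidence to judge yet
        return sum(self.errors) / len(self.errors) > self.threshold


# Hypothetical usage: compare estimates against eventual sale prices.
monitor = ErrorMonitor(window=3, threshold=0.10)
for predicted, actual in [(300_000, 310_000), (450_000, 440_000), (200_000, 205_000)]:
    monitor.record(predicted, actual)
stable_market_flag = monitor.needs_recalibration()
```

Waiting for a full window before flagging keeps a single unusual sale from triggering recalibration on its own.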

Explainable Decision-Making

In high-stakes financial decisions, understanding system outputs is as important as their accuracy. Interpretability features allow users to comprehend the factors influencing recommendations, building trust and facilitating human oversight. This is particularly crucial for valuation models where transparency directly impacts regulatory compliance.
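For a linear valuation model, interpretability can be as simple as reporting how much each feature adds to or subtracts from the estimate. The sketch below assumes such a linear model; the coefficient values and feature names are invented for illustration, and more complex models would need a dedicated attribution method instead.

```python
def explain_linear_estimate(intercept, coefficients, features):
    """Return the estimate and per-feature contributions, largest effect first."""
    contributions = {
        name: coefficients[name] * value for name, value in features.items()
    }
    estimate = intercept + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return estimate, ranked


# Hypothetical coefficients (currency units per unit of each feature).
coefficients = {"square_feet": 150, "bedrooms": 10_000, "age_years": -1_000}
features = {"square_feet": 2_000, "bedrooms": 3, "age_years": 20}

estimate, ranked = explain_linear_estimate(50_000, coefficients, features)
for name, amount in ranked:
    print(f"{name}: {amount:+,}")
```

A breakdown like this lets a reviewer see at a glance which inputs drove a valuation and challenge any that look wrong.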

System Integration

Seamless incorporation into existing platforms requires thoughtful architecture design. Effective integration strategies minimize disruption while maximizing the utility of new analytical capabilities. Ongoing maintenance ensures the system remains current with changing regulations and market practices.

Ethical Implementation

Responsible deployment requires proactive attention to potential biases and fairness concerns. Regular audits can identify and address unintended discriminatory patterns in system outputs. Clear ethical guidelines should govern all aspects of system development and deployment in the property sector.

Practical Challenges in Implementing Intelligent Transaction Systems

Information Security Considerations

Implementing advanced analytical systems demands stringent data protection measures given the sensitive nature of property transaction information. Compliance with evolving privacy regulations requires ongoing attention to data handling practices and security protocols.

Potential biases in valuation models necessitate careful testing across diverse property markets. Regular audits help ensure these systems don't inadvertently perpetuate historical inequities in property valuation and financing.

Addressing Algorithmic Fairness

Historical data patterns may reflect past market biases that shouldn't influence future decisions. Comprehensive testing across different demographics and neighborhoods helps identify and correct these issues before system deployment.
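A basic audit along these lines compares how far model estimates sit from actual sale prices in each neighborhood. The sketch below computes the median predicted-to-actual ratio per group and flags a gap larger than a chosen tolerance. The group labels, figures, and the 0.05 tolerance are assumptions for illustration; a real audit would use larger samples and statistically grounded thresholds.

```python
from collections import defaultdict
from statistics import median


def ratio_by_group(records):
    """Median predicted/actual price ratio for each group."""
    ratios = defaultdict(list)
    for group, predicted, actual in records:
        ratios[group].append(predicted / actual)
    return {group: median(values) for group, values in ratios.items()}


def flag_disparity(group_ratios, tolerance):
    """True when the highest and lowest group ratios differ by more than tolerance."""
    return max(group_ratios.values()) - min(group_ratios.values()) > tolerance


# Hypothetical records: (neighborhood, predicted price, actual sale price).
records = [
    ("north", 200, 200), ("north", 210, 200), ("north", 190, 200),
    ("south", 170, 200), ("south", 180, 200), ("south", 160, 200),
]
group_ratios = ratio_by_group(records)
disparity = flag_disparity(group_ratios, tolerance=0.05)
```

In this made-up data the model tracks sale prices in one neighborhood but systematically undervalues the other, which is the kind of pattern an audit should surface before deployment.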

Developing mitigation strategies requires collaboration between technical teams and domain experts. This ensures solutions are both technically sound and practically effective in real-world property markets.

Legacy System Compatibility

Many real estate firms operate on older technology platforms that weren't designed for modern analytical tools. Gradual modernization strategies can bridge this gap while maintaining operational continuity.

Effective integration planning identifies critical data flows and workflow touchpoints. This targeted approach minimizes disruption while maximizing the value of new system capabilities.

Cost-Benefit Analysis

Justifying the investment requires clear metrics that demonstrate operational improvements. These might include reduced transaction times, lower error rates, or improved customer satisfaction scores that translate to competitive advantage.
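One common way to express such metrics is a payback period: the number of months until cumulative net savings cover the upfront cost. The sketch below is a deliberately simple version that ignores discounting, and every figure in it is hypothetical.

```python
import math


def payback_months(upfront_cost, monthly_savings, monthly_running_cost):
    """Whole months until net savings cover the upfront cost, or None if never."""
    net = monthly_savings - monthly_running_cost
    if net <= 0:
        return None  # the system never pays for itself
    return math.ceil(upfront_cost / net)


# Hypothetical figures in a single currency.
months = payback_months(
    upfront_cost=120_000, monthly_savings=15_000, monthly_running_cost=5_000
)
```

Savings from reduced transaction times or lower error rates have to be converted into a monthly monetary figure first, which is usually the hardest part of the estimate.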

Regulatory Compliance

The legal landscape for these technologies continues to evolve rapidly. Proactive compliance strategies anticipate future regulatory directions while meeting current requirements.

User Acceptance

Effective training programs should emphasize practical benefits and hands-on experience. Addressing user concerns early in the implementation process fosters more rapid and complete adoption across organizations.

Maintaining Transparency

Clear documentation of system operations and decision factors builds user confidence. Establishing review processes for contested decisions maintains appropriate human oversight of automated systems.
