AI-Driven Valuation: Precision in Real Estate Pricing

Core Techniques in Predictive Analysis

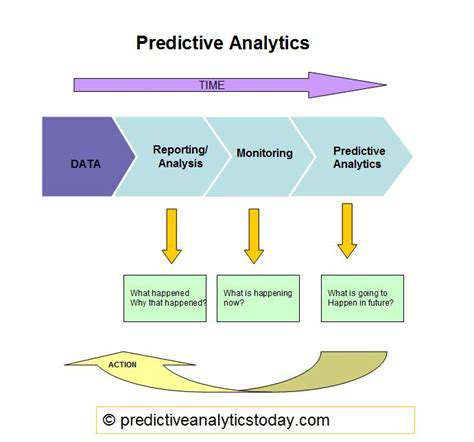

At its heart, predictive modeling combines statistics and machine learning to build algorithms that estimate future or unknown outcomes from patterns in past data. By analyzing historical datasets, these models can uncover correlations that human analysts might miss. For decision-makers, this capability turns raw data into strategic foresight, enabling proactive rather than reactive management.

Data Refinement Process

The foundation of any reliable model is careful data preparation. Analysts remove duplicate and erroneous records, handle missing values (for example, by imputation), and convert inconsistent formats and units into a common representation. How anomalies and incomplete records are handled often separates insightful models from flawed projections. Feature engineering further strengthens a model by deriving new predictive variables from existing ones, such as computing a building's age from its construction year.
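As a minimal sketch of these steps, the Python below works on a few hypothetical listing records with `id`, `sqft`, `year_built`, and `price` fields. It drops duplicates and impossible prices, normalizes square-footage strings such as `"1,450"` to numbers, fills missing values with the median, and derives building age as an engineered feature. The field names, the `clean` function, and the fixed `current_year` default are illustrative assumptions, not part of any particular valuation product.

```python
from statistics import median

# Hypothetical raw listing records; field names are illustrative assumptions.
RAW = [
    {"id": "A1", "sqft": "1,450", "year_built": 1998, "price": 310_000},
    {"id": "A2", "sqft": None, "year_built": 2005, "price": 355_000},     # missing size
    {"id": "A3", "sqft": "2100", "year_built": 1975, "price": 402_000},
    {"id": "A3", "sqft": "2100", "year_built": 1975, "price": 402_000},   # duplicate
    {"id": "A4", "sqft": "1800", "year_built": 2010, "price": -1},        # invalid price
]


def parse_sqft(value):
    """Normalize strings like '1,450' to a float; None (missing) stays None."""
    if value is None:
        return None
    return float(str(value).replace(",", ""))


def clean(records, current_year=2024):
    """Deduplicate, drop invalid prices, normalize formats, impute, and add features."""
    seen, rows = set(), []
    for record in records:
        if record["id"] in seen or record["price"] <= 0:
            continue  # skip duplicate IDs and impossible prices
        seen.add(record["id"])
        rows.append({**record, "sqft": parse_sqft(record["sqft"])})
    # Impute missing square footage with the median of the observed values.
    fill = median(r["sqft"] for r in rows if r["sqft"] is not None)
    for row in rows:
        if row["sqft"] is None:
            row["sqft"] = fill
        # Feature engineering: building age derived from construction year.
        row["age"] = current_year - row["year_built"]
    return rows


for row in clean(RAW):
    print(row["id"], row["sqft"], row["age"])
```

Median imputation is only one option; whether it suits a dataset depends on why the values are missing in the first place.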

Choosing and Training Models

Selecting the right algorithmic approach depends on several factors: data complexity, required accuracy, and available computing power. Options range from straightforward regression models to intricate deep learning networks, and each offers distinct trade-offs in accuracy, interpretability, and cost. During training, the model's parameters are fitted to the prepared data so that it learns the relationships between the input features and the target being predicted. This learning process is the core of predictive capability.
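At the simple end of that spectrum, a one-feature linear regression fitted by ordinary least squares takes only a few lines. This sketch assumes square footage as the sole predictor and uses made-up sales; `fit_simple_ols` is a hypothetical helper name, and a real model would use many more features and a library implementation.

```python
def fit_simple_ols(xs, ys):
    """Fit y = intercept + slope * x by ordinary least squares (closed form)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Slope = covariance(x, y) / variance(x); the 1/n factors cancel.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


# Illustrative (made-up) sales: square footage and sale price.
sqft = [1000, 1500, 2000, 2500]
price = [200_000, 275_000, 350_000, 425_000]
intercept, slope = fit_simple_ols(sqft, price)
print(intercept, slope)  # 50000.0 150.0
```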

Assessing Model Performance

Rigorous testing determines whether a model can generalize beyond its training data. For classification tasks, metrics such as precision, recall, and accuracy reveal predictive strengths and weaknesses; for continuous targets such as sale prices, error measures like mean absolute error (MAE) and mean absolute percentage error (MAPE) are more appropriate. Most crucially, evaluation must use a holdout set of data the model never saw during training, because an over-fitted model can score well on its training data yet perform poorly in practice. This step ensures practical utility rather than theoretical perfection.
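A minimal holdout evaluation might look like the sketch below. It assumes a regression setting (predicting prices), so it reports MAE and MAPE rather than classification metrics; the function names, the 25% test fraction, and the fixed random seed are illustrative choices.

```python
import random


def train_test_split(rows, test_fraction=0.25, seed=0):
    """Shuffle a copy of the data and hold out a test set the model never trains on."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)  # fixed seed makes the split reproducible
    cut = int(len(shuffled) * (1 - test_fraction))
    return shuffled[:cut], shuffled[cut:]


def mae(actual, predicted):
    """Mean absolute error, in the same units as the prices."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def mape(actual, predicted):
    """Mean absolute percentage error; assumes no actual value is zero."""
    return 100 * sum(abs(a - p) / a for a, p in zip(actual, predicted)) / len(actual)


# Usage: fit on `train`, then score predictions on `test` only.
train, test = train_test_split(range(8))
print(len(train), len(test))        # 6 2
print(mae([100, 200], [110, 190]))  # 10.0
```

Comparing holdout error with training error is a simple over-fitting check: a large gap suggests the model memorized its training data.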

Extracting Actionable Insights

The true value emerges when organizations translate model outputs into strategic actions. Understanding which factors most influence predictions allows businesses to focus on key variables. This interpretation phase bridges technical analysis with executive decision-making, turning abstract numbers into concrete strategies. When done well, it reveals opportunities hidden within complex datasets.
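One common, model-agnostic way to see which factors drive predictions is permutation importance: shuffle one feature at a time and measure how much the error grows. The sketch below assumes a generic `predict` function and list-of-lists features; the toy model, which ignores its second feature by construction, is purely illustrative.

```python
import random


def permutation_importance(predict, X, y, n_repeats=5, seed=0):
    """Average increase in mean absolute error when each feature column is shuffled."""
    rng = random.Random(seed)

    def mae(rows):
        return sum(abs(predict(r) - target) for r, target in zip(rows, y)) / len(y)

    baseline = mae(X)
    importances = []
    for j in range(len(X[0])):
        total = 0.0
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)  # break the link between feature j and the target
            permuted = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            total += mae(permuted) - baseline
        importances.append(total / n_repeats)
    return importances


# Toy example: the model uses square footage (column 0) and ignores column 1.
def model(row):
    return 50_000 + 150 * row[0]


X = [[1000 + 100 * i, i % 3] for i in range(10)]
y = [model(row) for row in X]
print(permutation_importance(model, X, y))
```

A feature whose shuffling barely changes the error contributes little to the model's predictions, which is the signal businesses can use to focus on key variables.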

Operational Implementation

Moving from development to deployment requires careful integration with existing systems. Ongoing performance tracking becomes essential as real-world conditions inevitably diverge from training environments. Regular updates with fresh data maintain relevance, while monitoring protocols catch performance degradation before it impacts decisions. This lifecycle approach sustains predictive value over time.
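Performance tracking can start as simply as comparing a rolling error on recent, closed sales with the error measured at deployment. In this sketch the `ErrorMonitor` class, the window size, and the 1.5× tolerance are hypothetical settings, not recommended values.

```python
from collections import deque


class ErrorMonitor:
    """Rolling absolute-percentage-error tracker for a deployed valuation model."""

    def __init__(self, baseline_mape, tolerance=1.5, window=50):
        self.baseline_mape = baseline_mape  # error measured at deployment time
        self.tolerance = tolerance          # hypothetical: alert at 1.5x the baseline
        self.errors = deque(maxlen=window)  # keeps only the most recent closed sales

    def record(self, predicted, actual_sale_price):
        """Log one prediction once the property's actual sale price is known."""
        self.errors.append(100 * abs(predicted - actual_sale_price) / actual_sale_price)

    def rolling_mape(self):
        return sum(self.errors) / len(self.errors) if self.errors else 0.0

    def needs_retraining(self):
        return self.rolling_mape() > self.baseline_mape * self.tolerance


monitor = ErrorMonitor(baseline_mape=5.0, window=3)
for predicted, actual in [(310_000, 300_000), (360_000, 300_000), (240_000, 300_000)]:
    monitor.record(predicted, actual)
print(round(monitor.rolling_mape(), 2), monitor.needs_retraining())
```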

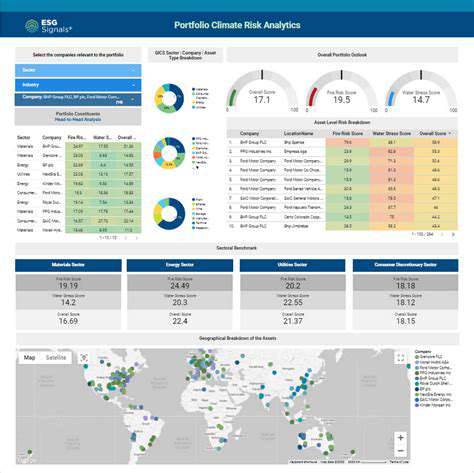

Industry Applications

From retail inventory optimization to financial risk assessment, predictive methods are reshaping operations across sectors. The ability to anticipate rather than react provides a competitive advantage in fast-moving markets. Healthcare applications range from epidemic forecasting to personalized treatment plans, demonstrating the technology's versatility in improving outcomes and efficiency.

Modernizing Valuation: Speed and Precision

Automated Data Processing

Intelligent systems now gather and filter valuation-relevant information from many sources, from financial disclosures to macroeconomic indicators. This automation cuts the time traditionally spent on manual data collection while broadening coverage. By automating the initial screening, these tools let professionals concentrate on nuanced interpretation rather than data wrangling.

Advanced Projection Methods

Machine learning identifies subtle patterns across historical valuation data that conventional approaches might miss. These algorithms account for complex interactions between variables, producing forecasts that reflect real-world complexity. Scenario analysis becomes more robust as models simulate various economic conditions, providing a spectrum of potential outcomes rather than single-point estimates.
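To illustrate producing a spectrum of outcomes instead of a single point, the sketch below runs a simple Monte Carlo simulation: each path compounds a randomly drawn annual growth rate, and the 10th, 50th, and 90th percentiles summarize the range. The normally distributed growth assumption, the `simulate_values` name, and the parameter values are illustrative only; a real model would derive them from the economic scenarios being tested.

```python
import random


def simulate_values(current_value, years, mean_growth, growth_sd, n_paths=10_000, seed=42):
    """Monte Carlo sketch: compound a random annual growth rate along each path."""
    rng = random.Random(seed)
    outcomes = []
    for _ in range(n_paths):
        value = current_value
        for _ in range(years):
            # Assumption: annual growth ~ Normal(mean_growth, growth_sd).
            value *= 1 + rng.gauss(mean_growth, growth_sd)
        outcomes.append(value)
    outcomes.sort()

    def percentile(p):
        return outcomes[int(p / 100 * (len(outcomes) - 1))]

    return {"p10": percentile(10), "p50": percentile(50), "p90": percentile(90)}


# Hypothetical scenario: 3% expected growth with 5% volatility over five years.
result = simulate_values(400_000, years=5, mean_growth=0.03, growth_sd=0.05)
print({k: round(v) for k, v in result.items()})
```

Running the function once per scenario (for example, with different mean growth rates for a downturn and an expansion) yields a comparable range for each.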

Dynamic Value Tracking

In volatile markets, static valuations quickly become outdated. Continuous monitoring systems adjust assessments in response to market movements, news events, and economic shifts. This responsiveness is particularly valuable for assets sensitive to fast-changing conditions, helping stakeholders work with current estimates.
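One simple mechanism for keeping an estimate current is to roll the last valuation forward with a market price index. The sketch assumes a hypothetical monthly local index feed and a hypothetical `index_adjusted_value` helper; it captures broad market movement only, not property-specific changes.

```python
def index_adjusted_value(last_value, index_at_valuation, index_now):
    """Scale a prior valuation by the change in a market price index since it was made."""
    if index_at_valuation <= 0 or index_now <= 0:
        raise ValueError("index levels must be positive")
    return last_value * index_now / index_at_valuation


# Hypothetical monthly index levels after a valuation made when the index stood at 200.
last_value, base_index = 350_000, 200.0
for month, level in [("Jan", 204.0), ("Feb", 198.5), ("Mar", 210.0)]:
    print(month, round(index_adjusted_value(last_value, base_index, level)))
```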

Standardized Evaluation Frameworks

Algorithmic valuation reduces the inconsistencies inherent in human judgment. By applying uniform criteria to every assessment, these systems produce comparable results regardless of which analyst runs the analysis. Detailed audit trails document each calculation, creating transparency that builds confidence among all parties involved in valuation-dependent decisions.
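A standardized, auditable valuation can be sketched as a fixed sequence of rules that records every intermediate result. Here the two rules (base value from price per square foot, then a condition factor) and the `AuditedValuation` class are hypothetical; the point is that each step logs its inputs, output, and rule version, plus a SHA-256 digest of the entry so later edits are detectable.

```python
import hashlib
import json
from dataclasses import dataclass, field


@dataclass
class AuditedValuation:
    """Apply one fixed rule set to every property and log each calculation step."""
    rules_version: str
    log: list = field(default_factory=list)

    def _step(self, name, inputs, output):
        entry = {"step": name, "inputs": inputs, "output": output,
                 "rules_version": self.rules_version}
        # Hash the canonical JSON form so any later edit to the entry is detectable.
        payload = json.dumps(entry, sort_keys=True).encode("utf-8")
        entry["digest"] = hashlib.sha256(payload).hexdigest()
        self.log.append(entry)
        return output

    def value(self, sqft, price_per_sqft, condition_factor):
        base = self._step("base_value",
                          {"sqft": sqft, "price_per_sqft": price_per_sqft},
                          sqft * price_per_sqft)
        return self._step("condition_adjustment",
                          {"base": base, "factor": condition_factor},
                          base * condition_factor)


# Hypothetical inputs: 1,500 sq ft at $200/sq ft with a 0.95 condition factor.
audit = AuditedValuation(rules_version="2024.1")
print(audit.value(1500, 200, 0.95))
for entry in audit.log:
    print(entry["step"], entry["output"], entry["digest"][:12])
```

Because the same inputs and rule version always produce the same log entries and digests, two analysts running the same property get identical, verifiable records.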

Cost-Efficient Processes

Automation substantially reduces both the time and expense of professional valuations. Smaller organizations gain access to sophisticated analysis previously available only to well-resourced firms. This broader access to valuation technology enables data-driven decision-making across the business spectrum.

Collaborative Assessment Platforms

Cloud-based valuation tools allow geographically dispersed teams to work on shared analyses simultaneously. Real-time data sharing and annotation features facilitate productive discussions about assumptions and methodologies. These collaborative environments help align stakeholders by making the valuation process more inclusive and transparent.

Real Estate Valuation's Digital Transformation

Next-Generation Property Valuation

Modern valuation platforms synthesize information from unconventional sources, including satellite imagery, neighborhood foot traffic patterns, and even local school district performance metrics. This multidimensional analysis captures factors that traditional appraisals might neglect, providing a more complete picture of property value drivers in today's complex real estate markets.

Unified Data Ecosystems

Breakthroughs in data integration allow valuation systems to incorporate dozens of relevant datasets into a single analytical framework. Municipal records, demographic shifts, transportation developments, and environmental factors all feed into comprehensive valuation models. This holistic approach captures the interconnected nature of real estate value determinants.
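At its core, this kind of integration is a join on a shared property key. The sketch assumes each source is a dictionary keyed by a hypothetical parcel ID and left-joins them onto municipal records, prefixing each field with its source name so its origin stays visible. Real pipelines must also reconcile mismatched identifiers and addresses, which this example skips.

```python
def integrate(parcels, *sources):
    """Left-join named sources onto base parcel records, keyed by parcel ID."""
    merged = {}
    for parcel_id, base in parcels.items():
        row = dict(base)
        for source_name, records in sources:
            # A parcel missing from a source simply contributes no fields.
            for key, value in records.get(parcel_id, {}).items():
                row[f"{source_name}.{key}"] = value
        merged[parcel_id] = row
    return merged


# Hypothetical inputs keyed by parcel ID.
municipal = {"P1": {"sqft": 1450, "zoning": "R1"}, "P2": {"sqft": 2100, "zoning": "R2"}}
demographics = {"P1": {"median_income": 72_000}}
transit = {"P2": {"km_to_rail": 0.8}}

combined = integrate(municipal, ("demo", demographics), ("transit", transit))
print(combined["P1"])
# {'sqft': 1450, 'zoning': 'R1', 'demo.median_income': 72000}
```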

Accelerated Appraisal Cycles

What once required weeks of research can now happen in hours with automated valuation models (AVMs). Rapid analysis benefits all market participants: sellers receive timely estimates, buyers make quicker decisions, and lenders accelerate approval processes. This speed advantage becomes increasingly valuable in competitive housing markets where timing affects outcomes.
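Many automated valuation models build on the comparable-sales approach. The simplified sketch below picks the `k` sales most similar to the subject property, using only size and distance as similarity features, and applies their average price per square foot. The scaling constant, field names, and `comps_estimate` helper are assumptions for illustration; real AVMs use many more attributes and adjustments.

```python
import math


def comps_estimate(subject_sqft, sales, k=3):
    """Estimate value from the k sales most similar to the subject property."""

    def dissimilarity(sale):
        # Assumed scaling: 500 sq ft of size difference counts like 1 km of distance.
        size_gap = (sale["sqft"] - subject_sqft) / 500
        return math.hypot(size_gap, sale["km_away"])

    comps = sorted(sales, key=dissimilarity)[:k]
    avg_price_per_sqft = sum(s["price"] / s["sqft"] for s in comps) / len(comps)
    return avg_price_per_sqft * subject_sqft, comps


# Hypothetical recent sales; km_away is the distance from the subject property.
sales = [
    {"sqft": 1500, "price": 300_000, "km_away": 0.5},
    {"sqft": 1600, "price": 336_000, "km_away": 0.8},
    {"sqft": 1400, "price": 266_000, "km_away": 0.3},
    {"sqft": 3000, "price": 900_000, "km_away": 5.0},  # dissimilar, should be excluded
]
estimate, comps = comps_estimate(1500, sales)
print(estimate)  # 300000.0
```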

Responsive Valuation Adjustments

Smart valuation systems monitor leading indicators, from mortgage rate changes to local employment trends, and adjust estimates as conditions evolve. This dynamic approach is particularly valuable during periods of market volatility, keeping valuations aligned with current conditions rather than historical averages.

Streamlined Workflows

From initial data collection to final report generation, automation handles routine valuation tasks with precision. Standardized processes reduce variability between appraisals while minimizing opportunities for human error. The resulting consistency improves reliability for all parties relying on valuation conclusions.

Explainable AI Valuation

While leveraging complex algorithms, modern systems provide clear explanations of how specific factors influence each valuation. Interactive dashboards allow users to explore the weight given to various criteria, building trust through transparency. This explainability bridges the gap between technical modeling and practical decision-making.
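For a linear model, this kind of explanation can be computed exactly: each feature contributes its coefficient times the property's difference from the average property. The sketch below uses hypothetical coefficients, feature means, and an `explain_linear` helper; for nonlinear models, attribution methods such as SHAP values generalize the same idea.

```python
def explain_linear(intercept, coefs, means, features):
    """Split a linear prediction into a baseline plus per-feature contributions."""
    # Baseline: the model's prediction for a property with average feature values.
    baseline = intercept + sum(coefs[name] * means[name] for name in coefs)
    # Contribution: coefficient times how far this property is from the average.
    contributions = {name: coefs[name] * (features[name] - means[name]) for name in coefs}
    return baseline, contributions


# Hypothetical fitted coefficients and training-set means.
coefs = {"sqft": 150, "bedrooms": 5_000}
means = {"sqft": 1_800, "bedrooms": 3}
baseline, parts = explain_linear(50_000, coefs, means, {"sqft": 2_000, "bedrooms": 4})
print(baseline, parts, baseline + sum(parts.values()))
# 335000 {'sqft': 30000, 'bedrooms': 5000} 370000
```

The baseline plus the contributions always equals the model's prediction, which is what lets a dashboard show each factor's share of the final figure.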

Addressing Implementation Challenges

As with any disruptive technology, adoption requires overcoming legitimate concerns. Data quality verification protocols ensure input accuracy, while bias detection algorithms identify and correct for skewed datasets. Continuous model validation against actual market outcomes maintains system credibility, creating a feedback loop that improves accuracy over time.
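Validation against market outcomes is often done with a ratio study that compares estimates with subsequent sale prices. The sketch computes the median ratio, the coefficient of dispersion (COD), and the price-related differential (PRD), statistics used in mass-appraisal ratio studies such as those described in the IAAO Standard on Ratio Studies, plus a per-group median ratio as a simple bias check. The helper names and the grouping by neighborhood are assumptions here.

```python
from statistics import mean, median


def ratio_study(estimates, sale_prices):
    """Summarize how model estimates line up with actual sale prices."""
    ratios = [e / s for e, s in zip(estimates, sale_prices)]
    med = median(ratios)
    # Coefficient of dispersion: average absolute deviation from the median, as % of it.
    cod = 100 * mean([abs(r - med) for r in ratios]) / med
    # Price-related differential: mean ratio divided by the sale-weighted mean ratio.
    prd = mean(ratios) / (sum(estimates) / sum(sale_prices))
    return {"median_ratio": med, "cod": cod, "prd": prd}


def median_ratio_by_group(estimates, sale_prices, groups):
    """Median estimate-to-sale ratio per group (e.g. neighborhood) as a bias check."""
    by_group = {}
    for e, s, g in zip(estimates, sale_prices, groups):
        by_group.setdefault(g, []).append(e / s)
    return {g: median(rs) for g, rs in by_group.items()}


# Hypothetical estimates and later sale prices in two neighborhoods.
est = [90_000, 95_000, 110_000, 105_000]
sold = [100_000, 100_000, 100_000, 100_000]
print(ratio_study(est, sold))
print(median_ratio_by_group(est, sold, ["North", "North", "South", "South"]))
```

A median ratio near 1.0 means estimates are centered on sale prices; group medians that diverge (here, North under-valued and South over-valued) point to systematic bias, and a PRD above 1 indicates lower-priced properties are overestimated relative to higher-priced ones.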
