AI-Powered Valuation: Debunking Myths and Highlighting the Real Benefits of AI

Data-Driven Precision: Improving Accuracy and Consistency

Data Collection and Integration

Establishing a comprehensive data collection framework forms the bedrock of precision-driven strategies. Rather than just identifying data points, this involves curating relevant datasets while maintaining rigorous quality checks. Data integrity isn't optional - it's the foundation that determines whether subsequent analysis holds water. Real-world implementation requires constant vigilance against data drift and systematic biases that could skew results.
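Here is a minimal sketch of one drift check. It assumes numeric inputs and a stored baseline sample. The population stability index (PSI) bins a reference sample and a recent sample on shared edges, then sums `(actual - expected) * ln(actual / expected)` across the bins. The function name, the bin edges and the 0.25 alert level are illustrative assumptions. The 0.25 level is a commonly quoted rule of thumb, not a standard.

```python
import math
from typing import Sequence


def population_stability_index(
    expected: Sequence[float],
    actual: Sequence[float],
    bin_edges: Sequence[float],
    eps: float = 1e-6,
) -> float:
    """PSI between a baseline sample and a recent sample; 0.0 means identical binned shares."""

    def shares(values: Sequence[float]) -> list[float]:
        counts = [0] * (len(bin_edges) - 1)
        for v in values:
            for i in range(len(counts)):
                is_last = i == len(counts) - 1
                # Bins are half-open [lo, hi), except the last one, which includes its upper edge.
                if bin_edges[i] <= v < bin_edges[i + 1] or (is_last and v == bin_edges[-1]):
                    counts[i] += 1
                    break  # values outside the edges are simply not counted
        total = max(sum(counts), 1)
        # eps keeps empty bins from producing log(0) or division by zero.
        return [max(c / total, eps) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


baseline = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]   # hypothetical reference sample
recent = [6, 7, 8, 9, 10, 11, 12, 13, 14, 15]  # hypothetical recent sample
edges = [0, 5, 10, 15]
psi = population_stability_index(baseline, recent, edges)
if psi > 0.25:  # hypothetical alert threshold
    print(f"Possible drift: PSI = {psi:.2f}")
```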

Merging datasets from disparate sources - whether internal records, third-party APIs, or IoT devices - presents both opportunities and challenges. The magic happens when we transform raw, conflicting data into a harmonized knowledge base that tells a coherent story. This often requires sophisticated ETL (Extract, Transform, Load) pipelines and data mapping protocols.
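The transform-and-load step can be sketched in plain Python. Several details are hypothetical:

- the two input schemas (`id`/`sqft` for internal records, `parcel`/`area_m2` for a vendor feed);
- the rule that internal records win;
- the 5% conflict tolerance.

A production pipeline would typically run in a dedicated ETL tool rather than a script like this.

```python
from dataclasses import dataclass

SQFT_PER_M2 = 10.7639  # square feet per square metre


@dataclass
class Record:
    property_id: str
    area_sqft: float
    source: str


def normalize_id(raw: object) -> str:
    # Harmonize identifiers: trim whitespace and use one letter case.
    return str(raw).strip().upper()


def from_internal(row: dict) -> Record:
    # Hypothetical internal schema: {"id": ..., "sqft": ...}
    return Record(normalize_id(row["id"]), float(row["sqft"]), "internal")


def from_vendor(row: dict) -> Record:
    # Hypothetical vendor schema: {"parcel": ..., "area_m2": ...}; convert to square feet.
    return Record(normalize_id(row["parcel"]), float(row["area_m2"]) * SQFT_PER_M2, "vendor")


def harmonize(internal_rows: list[dict], vendor_rows: list[dict], tolerance: float = 0.05):
    """Merge both sources by property ID; internal values win, large disagreements are flagged."""
    merged: dict[str, Record] = {}
    conflicts: list[str] = []
    for rec in map(from_vendor, vendor_rows):
        merged[rec.property_id] = rec
    for rec in map(from_internal, internal_rows):
        prior = merged.get(rec.property_id)
        if prior is not None and abs(prior.area_sqft - rec.area_sqft) > tolerance * rec.area_sqft:
            conflicts.append(rec.property_id)
        merged[rec.property_id] = rec
    return merged, conflicts


merged, conflicts = harmonize(
    internal_rows=[{"id": "a1", "sqft": 1000}, {"id": "b2", "sqft": 2000}],
    vendor_rows=[
        {"parcel": " A1 ", "area_m2": 92.9},
        {"parcel": "b2", "area_m2": 50},
        {"parcel": "C3", "area_m2": 100},
    ],
)
print(conflicts)  # ['B2']
```

The sketch flags conflicts instead of silently overwriting them. That keeps each disagreement between sources visible for review.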

Defining Precision Metrics

Precision without measurable outcomes is just theoretical. In practice, we need to establish concrete KPIs that reflect the true objectives. For manufacturers, this might translate to tracking first-pass yield improvements or reduced rework rates. What gets measured gets managed - which is why defining these success metrics early creates accountability.

Quantifiable benchmarks serve as our compass, allowing course corrections when processes veer off track. This measurement framework evolves iteratively as we gain operational insights and refine our understanding of what good looks like.
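For the manufacturing KPIs mentioned above, here is a sketch that assumes the common definitions:

- first-pass yield = units that pass without rework / units started;
- rework rate = reworked units / units started.

The `BatchStats` fields and the targets in the example are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class BatchStats:
    units_started: int
    passed_first_time: int  # passed inspection with no rework or scrap
    reworked: int


def first_pass_yield(b: BatchStats) -> float:
    if b.units_started <= 0:
        raise ValueError("units_started must be positive")
    return b.passed_first_time / b.units_started


def rework_rate(b: BatchStats) -> float:
    if b.units_started <= 0:
        raise ValueError("units_started must be positive")
    return b.reworked / b.units_started


def needs_course_correction(b: BatchStats, fpy_target: float, rework_ceiling: float) -> bool:
    """True when either KPI misses its benchmark."""
    return first_pass_yield(b) < fpy_target or rework_rate(b) > rework_ceiling


batch = BatchStats(units_started=1000, passed_first_time=940, reworked=45)
print(first_pass_yield(batch), rework_rate(batch))  # 0.94 0.045
print(needs_course_correction(batch, fpy_target=0.95, rework_ceiling=0.03))  # True
```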

Developing Predictive Models

Modern predictive analytics combines statistical rigor with machine learning's pattern-recognition capabilities. Garbage in, garbage out remains painfully true - model accuracy depends heavily on training data quality. That's why data scientists are often said to spend as much as 80% of their time preparing data before modeling.

From anticipating machine failures to forecasting sales trends, these models shine when predicting complex, multivariate outcomes. Their real value lies in shifting from reactive firefighting to proactive optimization - catching issues before they escalate.

Implementing and Monitoring Interventions

Turning insights into action requires meticulous planning. Successful implementation hinges on cross-functional alignment - when engineering, operations, and leadership row in the same direction. Change management becomes critical, especially when introducing data-driven processes to traditionally intuitive operations.

Continuous monitoring acts as our early warning system. It's not enough to deploy solutions - we need mechanisms to assess their real-world impact and catch unintended consequences quickly.
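One simple early-warning mechanism is a Shewhart-style control check. It derives limits of mean ± 3 standard deviations from a stable baseline period and flags new observations that fall outside them. This is a simplified sketch, since formal individuals charts usually estimate sigma from moving ranges. The function names and data are hypothetical.

```python
import statistics


def control_limits(baseline: list[float], k: float = 3.0) -> tuple[float, float]:
    """Lower and upper limits at mean +/- k sample standard deviations."""
    mu = statistics.mean(baseline)
    sigma = statistics.stdev(baseline)  # requires at least two points
    return mu - k * sigma, mu + k * sigma


def out_of_control(observations: list[float], limits: tuple[float, float]) -> list[int]:
    """Indices of observations outside the control limits."""
    lower, upper = limits
    return [i for i, x in enumerate(observations) if x < lower or x > upper]


baseline = [10, 11, 9, 10, 10, 11, 9, 10]  # hypothetical daily defect counts before rollout
limits = control_limits(baseline)          # roughly (7.73, 12.27)
print(out_of_control([10, 12, 14, 7], limits))  # [2, 3]
```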

Analyzing Results and Refining Strategies

The analysis phase separates vanity metrics from genuine insights. Sophisticated visualization tools help cut through the noise, revealing patterns that raw numbers might obscure. This is where we validate hypotheses and pressure-test assumptions against hard evidence.
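A permutation test is one assumption-light way to pressure-test a claim such as "the intervention lowered defects". It shuffles the before/after labels many times and counts how often a difference at least as large as the observed one appears by chance. The sketch below uses hypothetical data and a fixed seed for reproducibility.

```python
import random


def mean(xs: list[float]) -> float:
    return sum(xs) / len(xs)


def permutation_p_value(before: list[float], after: list[float],
                        n_resamples: int = 10_000, seed: int = 0) -> float:
    """Two-sided permutation test for a difference in means."""
    rng = random.Random(seed)
    observed = abs(mean(after) - mean(before))
    pooled = list(before) + list(after)
    n_before = len(before)
    extreme = 0
    for _ in range(n_resamples):
        rng.shuffle(pooled)
        diff = abs(mean(pooled[n_before:]) - mean(pooled[:n_before]))
        if diff >= observed - 1e-12:  # small tolerance absorbs floating-point rounding
            extreme += 1
    # The +1 terms count the observed labelling itself, so p is never exactly 0.
    return (extreme + 1) / (n_resamples + 1)


before = [5.1, 4.8, 5.3, 5.0, 4.9, 5.2]  # hypothetical defect rates (%) before the change
after = [3.9, 4.1, 4.0, 3.8, 4.2, 4.0]   # and after
p = permutation_p_value(before, after)
print(f"p = {p:.4f}")
```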

Continuous Improvement and Adaptation

Data-driven operations never truly finish. Market conditions evolve, technologies advance, and customer expectations shift - making continuous improvement non-negotiable. The most successful organizations institutionalize learning loops that bake insights back into processes.

This iterative approach creates a flywheel effect - each cycle builds on previous learnings, compounding operational advantages over time. It's less about perfection and more about consistent, measurable progress.
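The compounding effect can be made concrete with illustrative numbers. Assume a hypothetical 2% gain per improvement cycle, sustained over 12 cycles:

```latex
\text{relative performance after } n \text{ cycles} = (1 + r)^{n},
\qquad (1.02)^{12} \approx 1.268
```

The gains compound to roughly 26.8%. If each cycle instead added a flat 2 percentage points of the original baseline, the total would be 24%.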

Authenticity isn't performative - it's about aligning external actions with internal values in ways that withstand scrutiny. This genuine alignment builds the trust capital that fuels lasting business relationships. When organizations demonstrate authentic consistency, they create gravitational pull - attracting partners and customers who share their values.

The Future of Valuation: Embracing AI for Enhanced Insights

AI-Driven Valuation Models: A Paradigm Shift

The valuation industry stands at an inflection point. Where traditional methods relied on backward-looking multiples and rules of thumb, AI introduces forward-looking, multidimensional analysis. This isn't incremental improvement - it's fundamentally redefining how we assess worth in an increasingly complex economy.

Data-Driven Insights: Beyond Historical Trends

Modern AI systems digest everything from satellite imagery to supply chain data points, creating valuation mosaics impossible to assemble manually. Suddenly, obscure but predictive indicators - like parking lot occupancy or social media sentiment - become quantifiable inputs rather than anecdotal afterthoughts.

Enhanced Accuracy and Efficiency: Speed and Precision

AI doesn't just work faster than human analysts - it works differently. Neural networks can detect nonlinear relationships in datasets that would overwhelm spreadsheet models. The result? Valuations that can better reflect real-world complexities, potentially delivered in hours rather than weeks.

Predictive Capabilities: Anticipating Future Growth

The most valuable aspect might be AI's ability to model multiple future scenarios simultaneously. Traditional DCF models typically rest on a single set of point estimates and struggle to represent uncertainty. Simulation and machine-learning methods, by contrast, can generate and weight thousands of potential outcomes based on historical precedents and leading indicators.
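Scenario weighting of this kind is often implemented as Monte Carlo simulation over a DCF model rather than as machine learning proper, and that is the technique sketched here. Each trial draws a growth rate and a discount rate from hypothetical normal distributions. It then values the cash flows with a Gordon-growth terminal value. The resulting spread of values replaces a single point estimate. All distribution parameters, the five-year horizon and the 2% terminal growth are illustrative assumptions.

```python
import random
import statistics


def dcf_value(cash_flow_0: float, growth: float, discount: float,
              years: int = 5, terminal_growth: float = 0.02) -> float:
    """Present value of `years` growing cash flows plus a Gordon-growth terminal value."""
    if discount <= terminal_growth:
        raise ValueError("discount rate must exceed terminal growth")
    value, cf = 0.0, cash_flow_0
    for t in range(1, years + 1):
        cf *= 1 + growth
        value += cf / (1 + discount) ** t
    terminal = cf * (1 + terminal_growth) / (discount - terminal_growth)
    return value + terminal / (1 + discount) ** years


def simulate_values(cash_flow_0: float, n_trials: int = 5_000, seed: int = 42) -> list[float]:
    rng = random.Random(seed)
    values = []
    for _ in range(n_trials):
        growth = rng.gauss(0.05, 0.02)               # hypothetical growth assumption
        discount = max(rng.gauss(0.09, 0.01), 0.04)  # floor keeps discount above terminal growth
        values.append(dcf_value(cash_flow_0, growth, discount))
    return values


values = simulate_values(cash_flow_0=1_000_000)
deciles = statistics.quantiles(values, n=10)  # 9 cut points
p10, p50, p90 = deciles[0], deciles[4], deciles[8]
print(f"P10 {p10:,.0f}  P50 {p50:,.0f}  P90 {p90:,.0f}")
```

Reporting P10/P50/P90 communicates the range of plausible values instead of implying false precision. Those ranges are only as good as the input distributions.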

Overcoming Limitations of Traditional Methods

Human analysts inevitably bring cognitive biases - recency bias, confirmation bias, anchoring. AI systems aren't immune to bias, but their parameters can be explicitly tested and adjusted. This can make AI valuations more consistent across different analysts and market conditions.
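Testing for bias can start with something as simple as comparing a model's errors across segments. This sketch assumes you hold predicted and realized sale prices, each tagged with a segment label. It reports the median percentage error per segment and flags any segment whose typical error exceeds the tolerance in either direction. The segments, records and 5% tolerance are hypothetical.

```python
import statistics
from collections import defaultdict


def error_by_segment(records: list[tuple[str, float, float]]) -> dict[str, float]:
    """records: (segment, predicted, actual). Returns median percentage error per segment."""
    errors: dict[str, list[float]] = defaultdict(list)
    for segment, predicted, actual in records:
        errors[segment].append((predicted - actual) / actual)
    return {seg: statistics.median(errs) for seg, errs in errors.items()}


def biased_segments(records: list[tuple[str, float, float]], tolerance: float = 0.05) -> list[str]:
    """Segments whose median error is outside +/- tolerance (over- or under-valuation)."""
    return sorted(seg for seg, err in error_by_segment(records).items() if abs(err) > tolerance)


records = [
    ("urban", 105, 100), ("urban", 98, 100), ("urban", 101, 100),
    ("rural", 120, 100), ("rural", 115, 100),
]
print(biased_segments(records))  # ['rural']
```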

Ethical Considerations and Responsible Implementation

The path forward requires guardrails. Explainable AI techniques must demonstrate how models reach conclusions, while ongoing audits should monitor for dataset drift or unintended bias. The goal isn't replacing human judgment, but augmenting it with superior information processing - creating a collaborative intelligence greater than either could achieve alone.
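Permutation importance is one model-agnostic explainability technique. It shuffles one input column, re-scores the model, and treats the increase in error as that feature's importance. The sketch below assumes the model is exposed as a function from a feature row to a prediction. The toy pricing model and data are hypothetical. scikit-learn ships a fuller version as `sklearn.inspection.permutation_importance`.

```python
import random
from typing import Callable, Sequence

Model = Callable[[Sequence[float]], float]


def mean_abs_error(model: Model, X: list[list[float]], y: list[float]) -> float:
    return sum(abs(model(row) - target) for row, target in zip(X, y)) / len(y)


def permutation_importance(model: Model, X: list[list[float]], y: list[float],
                           n_repeats: int = 20, seed: int = 0) -> list[float]:
    """Average increase in MAE when each feature column is shuffled."""
    rng = random.Random(seed)
    baseline = mean_abs_error(model, X, y)
    importances = []
    for j in range(len(X[0])):
        increases = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Copy each row with only column j replaced by its shuffled value.
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            increases.append(mean_abs_error(model, X_perm, y) - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances


# Hypothetical toy model: price depends on floor area (thousands of sq ft) and ignores feature 1.
def toy_model(row: Sequence[float]) -> float:
    return 200_000 * row[0]


X = [[1.0, 5.0], [1.5, 3.0], [2.0, 9.0], [2.5, 1.0], [3.0, 7.0]]
y = [toy_model(row) for row in X]
print(permutation_importance(toy_model, X, y))  # feature 1 scores 0.0
```

A feature whose shuffling barely moves the error contributes little to the model's predictions on this data. An audit can check that kind of evidence against domain expectations.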

Read more about AI Powered Valuation: Debunking Myths and Highlighting the Real Benefits of AI

Hot Recommendations

- AI in Property Marketing: Virtual Tours and VR

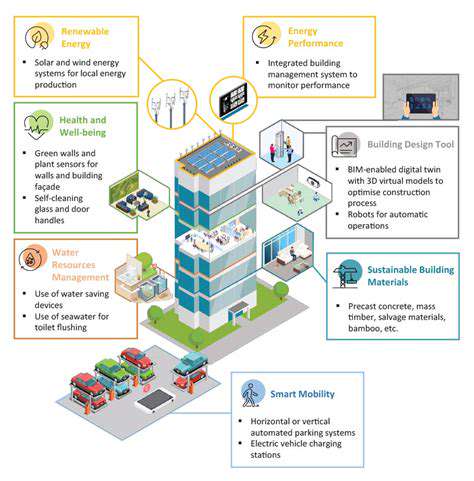

- Water Management Solutions for Sustainable Real Estate

- IoT Solutions for Smart Building Energy Management

- Sustainable Real Estate: Building a Greener Tomorrow

- Sustainable Real Estate: From Concept to Community

- AI Driven Due Diligence for Large Scale Developments

- Real Estate Sector and Global Climate Agreements

- Smart Buildings: The Key to Smarter Property Management

- Zero Waste Buildings: A Sustainable Real Estate Goal

- Understanding Climate Risk in Real Estate Financing