AI Driven Predictive Analytics for Property Values

Challenges and Considerations in Implementing AI

Data Quality and Availability

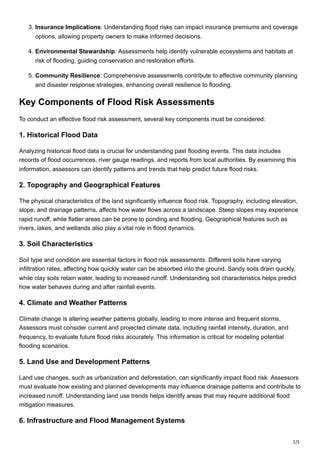

A crucial aspect of successful AI implementation is the quality and availability of the data used to train and validate models. Insufficient or inaccurate data can lead to biased or unreliable predictions, undermining the accuracy and usefulness of the entire predictive analytics system. Ensuring that data is complete, consistent, and accurate is a significant upfront investment that requires careful data cleaning and validation and, where appropriate, data augmentation. In practice this means identifying and handling missing values, outliers, and inconsistencies (for example, a missing square-footage figure or a duplicated sale record), each of which can degrade model performance and, ultimately, the reliability of the predictions.
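As a rough illustration of these cleaning steps, here is a minimal sketch assuming each sale is a dict with hypothetical `sqft` and `price` fields. Median imputation and the 1.5 * IQR outlier rule are common choices, not the only ones, and a real pipeline would likely use a dataframe library instead of plain lists.

```python
from statistics import median, quantiles

def clean_prices(records):
    """Drop exact duplicates, impute missing square footage with the median,
    and flag sale-price outliers with the 1.5 * IQR rule (hypothetical fields)."""
    # Drop exact duplicate records while preserving their original order.
    seen = set()
    unique = []
    for r in records:
        key = tuple(sorted(r.items()))
        if key not in seen:
            seen.add(key)
            unique.append(r)

    # Median imputation for missing square footage (copies, so inputs are not mutated).
    fill = median(r["sqft"] for r in unique if r["sqft"] is not None)
    cleaned = [{**r, "sqft": r["sqft"] if r["sqft"] is not None else fill} for r in unique]

    # Flag (rather than silently delete) prices outside the interquartile fences.
    q1, _, q3 = quantiles([r["price"] for r in cleaned], n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    for r in cleaned:
        r["price_outlier"] = not (low <= r["price"] <= high)
    return cleaned
```

Flagging outliers instead of dropping them leaves the final decision to someone who can check whether, say, a very high price is a data-entry error or a genuine luxury sale.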

Furthermore, data volume and velocity can pose a significant challenge. Many modern AI models, deep neural networks in particular, need large datasets to learn effectively. If the data isn't readily available, or isn't collected quickly enough to keep up with changing market conditions, the model's predictive power can be severely limited. Strategies for managing large datasets, including data warehousing, cloud-based storage, and well-designed data pipelines, are therefore essential for successful implementation.
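One basic pipeline pattern for data too large to hold in memory is to stream records in fixed-size batches and update statistics incrementally. The sketch below assumes records arrive as dicts with a hypothetical `price` field; the validation, transformation, and storage steps a real pipeline would perform are only marked by a comment.

```python
from itertools import islice
from typing import Iterable, Iterator, List

def batched(rows: Iterable[dict], size: int) -> Iterator[List[dict]]:
    """Yield lists of at most `size` rows so a large feed is processed incrementally."""
    it = iter(rows)
    while True:
        batch = list(islice(it, size))
        if not batch:
            return
        yield batch

class RunningPriceStats:
    """Track the count and mean sale price without storing every record."""

    def __init__(self) -> None:
        self.count = 0
        self.mean = 0.0

    def update(self, batch: List[dict]) -> None:
        for row in batch:
            self.count += 1
            # Incremental mean: nudge the current mean toward the new value.
            self.mean += (row["price"] - self.mean) / self.count

def run_pipeline(source: Iterable[dict], batch_size: int = 1000) -> RunningPriceStats:
    stats = RunningPriceStats()
    for batch in batched(source, batch_size):
        # A real pipeline would validate, transform, and write each batch to storage here.
        stats.update(batch)
    return stats
```

Because `source` can be any iterable, including a generator over a file or a database cursor, memory use depends on the batch size rather than on the size of the dataset.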

Model Selection and Training

Choosing the right AI model for a specific predictive task is critical, and there is no one-size-fits-all solution: different models excel with different types of data and tasks. Choosing well requires a thorough understanding of the data's characteristics, the desired outcome, and the limitations of each algorithm. Candidates typically include linear regression, decision trees, support vector machines, neural networks, and ensemble methods, each weighed for its strengths and weaknesses against the specific business problem.
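A common way to compare candidate models is to fit each on the same training split and score it on a held-out validation split. The sketch below uses only two deliberately simple stand-ins, a mean-price baseline and a one-feature least-squares regression on a hypothetical square-footage input; decision trees, SVMs, neural networks, or ensembles would normally come from a library and plug into the same `candidates` mapping.

```python
from statistics import mean

def fit_mean(xs, ys):
    """Baseline: always predict the average training price."""
    m = mean(ys)
    return lambda x: m

def fit_linear(xs, ys):
    """Ordinary least squares for a single feature: price = a + b * x."""
    mx, my = mean(xs), mean(ys)
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    a = my - b * mx
    return lambda x: a + b * x

def mae(model, xs, ys):
    """Mean absolute error of a fitted model on (xs, ys)."""
    return mean(abs(model(x) - y) for x, y in zip(xs, ys))

def select_model(train, valid, candidates):
    """Fit every candidate on the training split and return the name with the
    lowest validation MAE, together with all scores."""
    (xt, yt), (xv, yv) = train, valid
    scores = {name: mae(fit(xt, yt), xv, yv) for name, fit in candidates.items()}
    return min(scores, key=scores.get), scores
```

Keeping a trivial baseline among the candidates is useful: a complex model that cannot beat the average price on validation data is a warning sign.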

The training process itself can be complex and time-consuming. Partitioning the data into training, validation, and test sets, tuning model hyperparameters, and using a sound validation strategy such as cross-validation are essential for avoiding both overfitting and underfitting. Overfitting occurs when a model learns the training data too closely, capturing noise and irrelevant patterns, so it performs well on the training data but poorly on new data. Underfitting occurs when the model is too simple to capture the underlying relationships in the data. Finding the right balance between the two is key to achieving accurate and reliable predictions.
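The over/underfitting trade-off can be made concrete with k-nearest-neighbors regression, where the hyperparameter k controls model complexity: k = 1 reproduces each training price exactly (zero training error), while k equal to the size of the training set always predicts the overall mean. The sketch below is illustrative only, with a single hypothetical numeric feature, and tunes k by validation error.

```python
from statistics import mean

def knn_predict(train, x, k):
    """Average the prices of the k training points whose feature is closest to x."""
    nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
    return mean(y for _, y in nearest)

def knn_mae(train, data, k):
    """Mean absolute error of k-NN predictions (fitted on `train`) over `data`."""
    return mean(abs(knn_predict(train, x, k) - y) for x, y in data)

def tune_k(train, valid, ks):
    """Return the k with the lowest validation error, plus (train_err, valid_err) per k."""
    results = {k: (knn_mae(train, train, k), knn_mae(train, valid, k)) for k in ks}
    best = min(results, key=lambda k: results[k][1])
    return best, results
```

Comparing the two error columns is the practical diagnostic: near-zero training error with much higher validation error suggests overfitting, while high error on both suggests underfitting.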

Interpretability and Explainability

Many AI models, particularly deep learning models, behave as black boxes, making it difficult to understand how they arrive at their predictions. This lack of interpretability is a significant concern in high-stakes applications, where understanding the reasoning behind a prediction is essential, and explaining the models' decision-making process is crucial for building trust and ensuring accountability. Techniques such as feature importance analysis and visualization can help reveal which factors drive the model's predictions.
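Permutation importance is one model-agnostic form of feature importance analysis: shuffle a single feature's column, re-score the model, and treat the increase in error as that feature's importance. The sketch below assumes the model is any callable taking a dict of features; the feature names in the usage note are hypothetical.

```python
import random
from statistics import mean

def permutation_importance(predict, rows, target, features, repeats=5, seed=0):
    """Importance of each feature = mean increase in MAE after shuffling that
    feature's column, averaged over `repeats` random shuffles."""
    rng = random.Random(seed)

    def mae(data):
        return mean(abs(predict(r) - y) for r, y in zip(data, target))

    baseline = mae(rows)
    importances = {}
    for f in features:
        increases = []
        for _ in range(repeats):
            column = [r[f] for r in rows]
            rng.shuffle(column)
            # Copy each row with only feature f replaced by its shuffled value.
            shuffled = [{**r, f: v} for r, v in zip(rows, column)]
            increases.append(mae(shuffled) - baseline)
        importances[f] = mean(increases)
    return importances
```

For a model that ignores a feature (for example a hypothetical `noise` column), shuffling it leaves predictions unchanged and its importance comes out as zero, which is exactly the kind of evidence stakeholders can check.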

Transparency and explainability are paramount for gaining confidence in the results. Stakeholders need to understand why a particular prediction was made, and this requires tools and techniques to demystify the model's inner workings. Without this, it is difficult for users to accept or rely on the predictions, hindering the adoption and integration of AI solutions within the organization.

Integration and Deployment

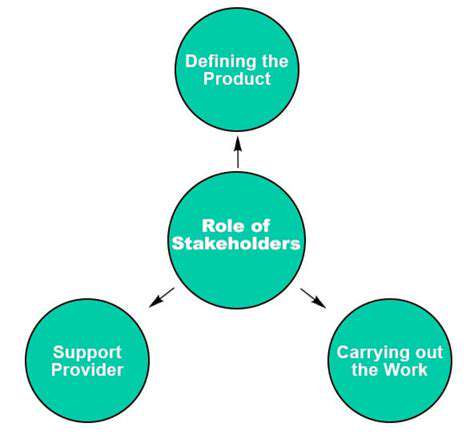

Integrating AI models into existing systems can be challenging. Careful planning and consideration of the infrastructure, workflows, and data pipelines are necessary to ensure seamless integration. This includes developing robust APIs, establishing secure data access protocols, and ensuring compatibility with existing software. A well-defined deployment strategy is critical for successful long-term implementation.
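A small part of "developing robust APIs" is validating requests before they reach the model and returning predictions in a stable, versioned format. The framework-agnostic handler below is a sketch under stated assumptions: the field names, the `MODEL_VERSION` tag, and the placeholder `predict_price` formula are all hypothetical, and in practice the handler would sit behind an HTTP server or web framework.

```python
import json

MODEL_VERSION = "demo-0.1"  # hypothetical version tag returned with every prediction
REQUIRED_FIELDS = {"sqft": (int, float), "bedrooms": int}  # hypothetical input schema

def predict_price(features):
    # Placeholder model; a deployed service would load a trained artifact instead.
    return 100 * features["sqft"] + 5000 * features["bedrooms"]

def handle_request(body: str):
    """Validate a JSON request body and return (status_code, JSON response body)."""
    try:
        payload = json.loads(body)
    except json.JSONDecodeError:
        return 400, json.dumps({"error": "body is not valid JSON"})
    if not isinstance(payload, dict):
        return 400, json.dumps({"error": "expected a JSON object"})
    for name, types in REQUIRED_FIELDS.items():
        value = payload.get(name)
        # Reject missing fields, wrong types, and booleans (bool is a subclass of int).
        if not isinstance(value, types) or isinstance(value, bool):
            return 422, json.dumps({"error": f"missing or invalid field: {name}"})
    price = predict_price(payload)
    return 200, json.dumps({"predicted_price": price, "model_version": MODEL_VERSION})
```

Returning the model version with each prediction makes it easier to trace a questionable valuation back to the exact model that produced it after redeployments.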

Ethical Considerations

AI-driven predictive analytics raise important ethical considerations. Biases in the data can lead to discriminatory outcomes: for example, if historical sale prices or appraisals reflect past discrimination against certain neighborhoods, a model trained on them can learn to undervalue homes in those areas. Fairness, accountability, and transparency should be considered throughout the entire process. Developing and deploying equitable, unbiased models requires careful attention to data quality, model selection, and ongoing monitoring of how potential biases affect the target population, so that the system does not perpetuate existing inequalities.
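One simple form of ongoing monitoring is to compare valuation errors across groups. The sketch below computes the mean signed percentage error per group, where negative values mean the model undervalues relative to the sale price, and flags groups beyond a threshold. The `group`, `predicted`, and `actual` fields and the 5% threshold are hypothetical, and a check like this is only one diagnostic, not a complete fairness assessment.

```python
from collections import defaultdict
from statistics import mean

def valuation_bias_by_group(records, threshold=0.05):
    """Mean signed percentage error, (predicted - actual) / actual, per group.
    Groups whose absolute mean error exceeds `threshold` are flagged."""
    by_group = defaultdict(list)
    for r in records:
        by_group[r["group"]].append((r["predicted"] - r["actual"]) / r["actual"])
    report = {}
    for g, errs in by_group.items():
        bias = mean(errs)
        report[g] = {"mean_pct_error": bias, "flagged": abs(bias) > threshold}
    return report
```

A persistent negative error concentrated in one group is the pattern described above, and would call for a closer look at the training data and features for that group.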
